Will AI replace data engineering?

tl;dr Artificial Intelligence is revolutionizing data engineering not by replacing it, but by enhancing its capabilities, making it more accessible and intuitive. This advancement empowers data engineers to transition from traditional gatekeepers of data to strategic players who leverage AI to drive insights more efficiently.

As we explore the rapidly changing realm of large language models, we're gradually discovering what these advanced tools can really do. Yet in this technology race, there's one field that badly needs a revolution: data engineering.

So, why the spotlight on this now?

Data engineering hasn’t exactly been the poster child for innovation. It's been the overlooked middle child, always a few steps behind its flashier tech siblings, despite being utterly crucial to how businesses operate.

Recent strides, however, have come from cost-effective cloud data warehouses such as Snowflake, BigQuery, and Redshift, which mark a significant shift towards more affordable data storage and processing.

This is great! But what does this all mean for AI?

The current AI landscape in data engineering

In the rapidly evolving realm of artificial intelligence, we've witnessed a surge of innovations that promise to revolutionize how we interact with data. Among them are tools that let users query databases in natural language, turning complex SQL queries into simple conversations. The concept is dazzling in demos but often runs into practical challenges in real-world use, particularly when it comes to serving established businesses and their data teams.

Flashy demo ✨ versus reality

The allure of conversing with databases as if they were knowledgeable colleagues is undeniable. Imagine replacing intricate SQL syntax with straightforward questions and receiving answers accompanied by insightful graphs. Behind the scenes, these AI-driven tools dissect the database schema, craft SELECT statements, and retrieve the data.
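To make that mechanism concrete, here is a minimal sketch of the prompt-assembly step such a tool might perform. It assumes SQLite (via Python's built-in `sqlite3` module) as a stand-in for the warehouse and leaves out the actual LLM call. The function names `schema_dump` and `build_prompt` and the prompt wording are hypothetical, and real products differ in the details. Note that the only context the model receives is the raw schema.

```python
import sqlite3


def schema_dump(conn: sqlite3.Connection) -> str:
    """Return the CREATE TABLE statement of every user table, one per line."""
    rows = conn.execute(
        "SELECT sql FROM sqlite_master "
        "WHERE type = 'table' AND name NOT LIKE 'sqlite_%' "
        "ORDER BY name"
    ).fetchall()
    return "\n".join(sql + ";" for (sql,) in rows)


def build_prompt(conn: sqlite3.Connection, question: str) -> str:
    """Assemble a hypothetical text-to-SQL prompt from the raw schema alone.

    No business definitions, lineage, or previously written models are
    included: the schema is re-read and re-sent for every question.
    """
    return (
        "You are a SQL assistant. Given this database schema:\n\n"
        f"{schema_dump(conn)}\n\n"
        f"Write a single SELECT statement that answers: {question}"
    )


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE raw_orders (order_id INTEGER, customer_id INTEGER, order_date TEXT)"
    )
    print(build_prompt(conn, "What is the total number of orders placed by each customer?"))
```

The returned string would then be sent to a model. Whatever SQL comes back can only be as informed as this schema text.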

The reality is more complex than these demos suggest. Each interaction with the database starts from scratch, ignoring the layers of transformation metadata, business logic, and data lineage that give data its true value. It's akin to reintroducing yourself at every turn of the conversation: tedious and inefficient.

Understanding the occasional versus everyday user

The core issue stems from a misunderstanding of the target user. A casual or non-technical employee might benefit from this technology for occasional queries, but it barely scratches the surface of what data professionals need. Data scientists and engineers, who write SQL daily, require tools that go much deeper. They need powerful, integrated solutions that support version control, model generation, and workflow management, features that take their data manipulation capabilities far beyond the basics.

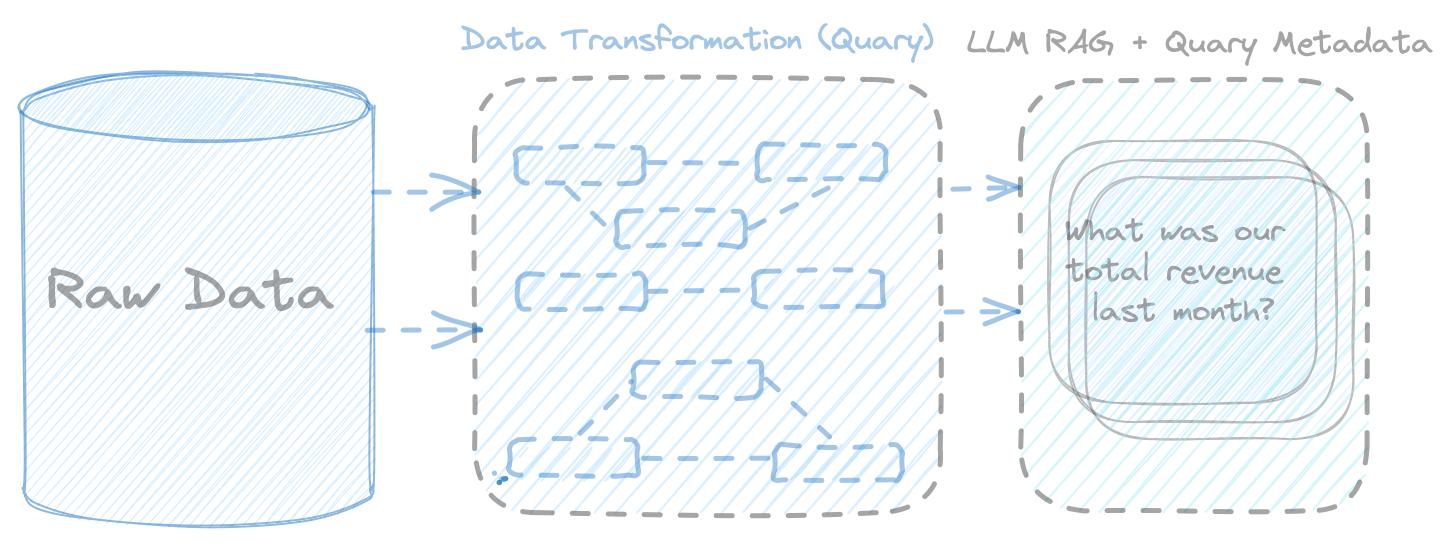

Crafting an enterprise-grade AI data tool

The future of AI in data engineering lies in creating systems that grow smarter, more intuitive, and more valuable over time. This means developing an AI tool that learns from each question, adapting to the unique complexities and evolving needs of every business.

At Quary, we are building the foundation for this bridge: a durable middle layer that not only understands the language of both sides but also becomes more valuable with every question it answers.

Let's look at a real-world example

Suppose I have a simple DuckDB database containing some raw datasets: raw_customers, raw_orders & raw_payments. Check out the full project here.

'Talk to the database' approach

Let's assume we're interested in understanding our customers' purchasing behavior.

"What is the total number of orders placed by each customer?"

An LLM, fed with a SQL schema dump, will give us this fairly primitive answer:

```sql
SELECT
    customer_id,
    COUNT(order_id) AS total_orders
FROM raw_orders
GROUP BY
    customer_id;
```

This will give the raw count of orders per customer. However, it's a simplistic view that doesn't account for any nuanced understanding of customer engagement or value. It treats all orders equally, regardless of their size, value, or frequency.
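To see that limitation on concrete rows, here is a small, self-contained reproduction using Python's built-in `sqlite3` module as a stand-in for DuckDB. The table layouts and values are invented for illustration, and the real raw tables in the project may use different column names. The second query pulls in order value from `raw_payments` to show what the bare count hides.

```python
import sqlite3

# Toy stand-ins for the raw tables; columns and values are hypothetical.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE raw_orders (order_id INTEGER, customer_id INTEGER, order_date TEXT);
    CREATE TABLE raw_payments (payment_id INTEGER, order_id INTEGER, amount REAL);
    INSERT INTO raw_orders VALUES
        (1, 1, '2018-01-01'), (2, 1, '2018-01-02'), (3, 1, '2018-01-03'),
        (4, 2, '2018-01-05');
    INSERT INTO raw_payments VALUES
        (1, 1, 5.0), (2, 2, 5.0), (3, 3, 5.0), (4, 4, 100.0);
""")

# The LLM-style answer: a bare count of orders per customer.
naive = conn.execute("""
    SELECT customer_id, COUNT(order_id) AS total_orders
    FROM raw_orders
    GROUP BY customer_id
    ORDER BY customer_id
""").fetchall()

# The same customers with order value joined in from raw_payments.
with_value = conn.execute("""
    SELECT o.customer_id,
           COUNT(DISTINCT o.order_id) AS total_orders,
           SUM(p.amount) AS total_amount
    FROM raw_orders AS o
    JOIN raw_payments AS p ON p.order_id = o.order_id
    GROUP BY o.customer_id
    ORDER BY o.customer_id
""").fetchall()

print(naive)       # [(1, 3), (2, 1)]
print(with_value)  # [(1, 3, 15.0), (2, 1, 100.0)]
```

By count alone, customer 1 looks three times as engaged as customer 2. In these toy rows, however, customer 2 spent far more.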

Adding Quary's metadata layer to the mix

Now, let's address the same question through the lens of Quary's metadata layer, which enriches raw data with business logic and context:

```sql
SELECT
    customer_id,
    first_name,
    last_name,
    first_order,
    most_recent_order,
    number_of_orders,
    total_order_amount
FROM customers;
```

Rather than querying the source tables directly, this approach uses a model previously crafted by our analysts. This gives us a more nuanced view of each customer's engagement with our business over time. By applying the Quary metadata layer, we can interpret the raw data in a way that aligns much more closely with the insights the business actually cares about.
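The post doesn't show how the `customers` model is defined. As a rough, hypothetical sketch of what an analyst might write, the model below derives each selected column from the three raw tables. It is expressed as a plain SQLite view run through Python's `sqlite3` module. It is not Quary's own model format, and the raw table layouts and toy rows are assumptions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Hypothetical raw tables with toy rows (stand-ins for the DuckDB project's data).
conn.executescript("""
    CREATE TABLE raw_customers (customer_id INTEGER, first_name TEXT, last_name TEXT);
    CREATE TABLE raw_orders (order_id INTEGER, customer_id INTEGER, order_date TEXT);
    CREATE TABLE raw_payments (payment_id INTEGER, order_id INTEGER, amount REAL);
    INSERT INTO raw_customers VALUES (1, 'Ada', 'A.'), (2, 'Ben', 'B.'), (3, 'Cara', 'C.');
    INSERT INTO raw_orders VALUES
        (1, 1, '2018-01-01'), (2, 1, '2018-01-02'), (3, 1, '2018-01-03'),
        (4, 2, '2018-01-05');
    INSERT INTO raw_payments VALUES (1, 1, 5.0), (2, 2, 5.0), (3, 3, 5.0), (4, 4, 100.0);

    -- Hypothetical reusable model: the business logic is written once.
    CREATE VIEW customers AS
    WITH customer_orders AS (
        SELECT customer_id,
               MIN(order_date) AS first_order,
               MAX(order_date) AS most_recent_order,
               COUNT(order_id) AS number_of_orders
        FROM raw_orders
        GROUP BY customer_id
    ),
    customer_payments AS (
        SELECT o.customer_id AS customer_id, SUM(p.amount) AS total_order_amount
        FROM raw_payments AS p
        JOIN raw_orders AS o ON o.order_id = p.order_id
        GROUP BY o.customer_id
    )
    SELECT c.customer_id AS customer_id,
           c.first_name AS first_name,
           c.last_name AS last_name,
           co.first_order AS first_order,
           co.most_recent_order AS most_recent_order,
           COALESCE(co.number_of_orders, 0) AS number_of_orders,
           COALESCE(cp.total_order_amount, 0) AS total_order_amount
    FROM raw_customers AS c
    LEFT JOIN customer_orders AS co ON co.customer_id = c.customer_id
    LEFT JOIN customer_payments AS cp ON cp.customer_id = c.customer_id;
""")

# The question is now answered against the model, not the raw tables.
rows = conn.execute("""
    SELECT customer_id, first_name, last_name, first_order,
           most_recent_order, number_of_orders, total_order_amount
    FROM customers
    ORDER BY customer_id
""").fetchall()

for row in rows:
    print(row)
```

Because the joins and aggregations live in the model, later questions about customers can start from `customers` rather than rediscovering how orders and payments relate. The `LEFT JOIN`s also keep customers who have never ordered, which a query over `raw_orders` alone would silently drop.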

We firmly believe AI will not replace data engineering but transform it, making data more accessible and intuitive for everyone.

Until next time!

Louis